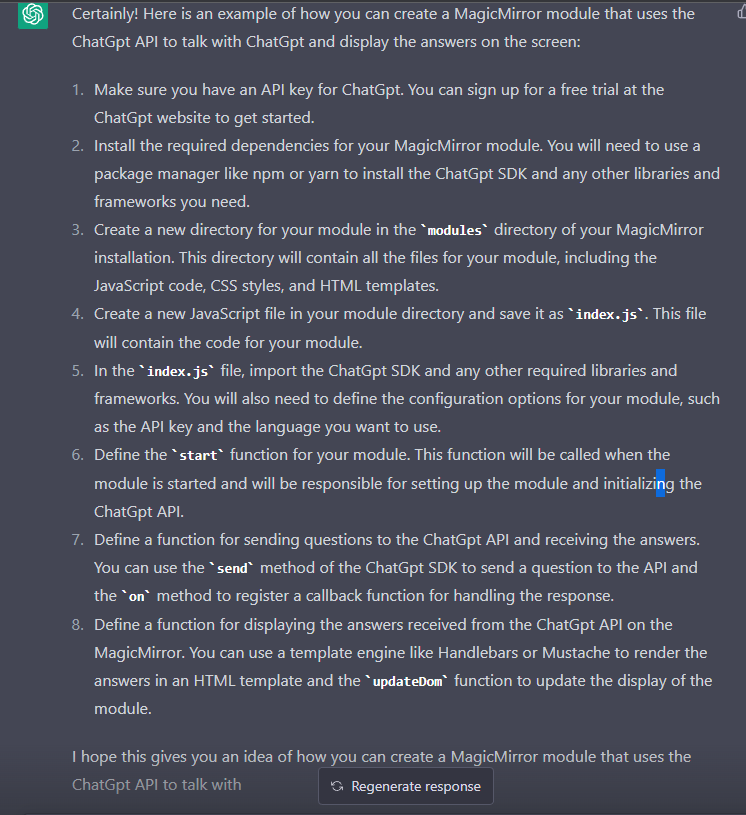


ChatGPT integration

-

@SILLEN-0 the error says that at the time of trying to assign the apiKey to the openai object, the openai object is undefined.

```
TypeError: Cannot set properties of undefined (setting 'apiKey')
0|MagicMirror | at /home/pi/MagicMirror/modules/chatgpt/node_helper.js:12:27
```

'undefined' here is openai,

so, I see you print the object just after the require() at the top:

```javascript
const openai = require("openai").default;
console.log(openai);
```

and I assume that you looked at the output of npm start (where console.log messages go) and are satisfied that the require('openai') worked.
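An editorial note worth checking at this step: as far as I know, version 3 of the `openai` npm package (the logs later in this thread show `OpenAI/NodeJS/3.1.0`) exports named classes such as `Configuration` and `OpenAIApi` and has no `default` export, so `require("openai").default` would itself be `undefined`, which would match this error. The helper below, `pickExport`, is a hypothetical name made up for illustration; it fails fast with a readable message that lists what the module actually exports, instead of failing later with "Cannot set properties of undefined".

```javascript
// Hypothetical helper (not part of MagicMirror or openai): look up a named
// export and fail immediately, with a readable message, if it is missing.
function pickExport(mod, name) {
  if (mod === null || mod === undefined) {
    throw new Error(`module is ${mod}, so "${name}" cannot be read`);
  }
  const value = mod[name];
  if (value === undefined) {
    const available = Object.keys(mod).join(", ") || "(none)";
    throw new Error(`export "${name}" not found; available exports: ${available}`);
  }
  return value;
}

// Possible use in node_helper.js (assuming the v3 openai package):
// const openaiModule = require("openai");
// const OpenAIApi = pickExport(openaiModule, "OpenAIApi"); // found
// pickExport(openaiModule, "default"); // throws and lists the real exports
```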

so now, check again later.

I would add another console.log(openai) after the getResponse:

```javascript
getResponse: function(question) {
  // here .. is the openai object good (not null) here?
  console.log(openai);
  return new Promise((resolve, reject) => {
    openai.apiKey = "XXXXXXXXXXXXXX"; // this is the statement that failed
    // ... rest of the request
  });
},
```

-

HOLY SHIT, I GOT IT TO WORK! OK, I need to calm down, I am so happy right now. So it turns out I was trying to call everything with openai.apiKey instead of just apiKey, and then I had to do something else (I don't even remember what I did), but now I got it to work and it displays on the MagicMirror. But now comes the hard part. If you look in the code, there is a variable named question that the prompt uses and sends to the API. I am no expert, but can you have the variable question be defined with some kind of speech-to-text thing?

-

@SILLEN-0 said in ChatGPT integration:

and I am no expert but can you have the variable question be defined with some kind of speech-to-text thing?

I don't know what you mean…

Unless you want to capture speech and convert it to text for OpenAI?

Welcome to the problem I have highlighted since the beginning… there is no GOOD speech capture library, and nothing built in.

This is why MMM-GoogleAssistant provides mechanisms (recipes) to use the captured text for non-Google uses.

MMM-Voice uses the PocketSphinx library from Carnegie Mellon. For me it is terrible: under 60% accurate, and I have to keep saying things over and over.

GA and most others use cloud-based services (none of them free), but they put a filter (a hotword, like "OK Google" or "Alexa") in front to keep the cost down, rather than converting everything.

The other mirror platform I support, smart-mirror, is voice-based, so your 'plugin' can get the text from the speech. It is not compatible with MM, though.

-

I have MMM-GoogleAssistant and I have used the recipes for some other stuff, but could you get that to work with ChatGPT? Saying something like "OK Google, chatgpt" followed by the question you want to ask, and then it takes the question, makes it a variable or something that the prompt can read, and refreshes the answer on the MagicMirror. Also, what do you mean by "none free"? MMM-GoogleAssistant is free. But I think it wouldn't be a big issue having to pay for ChatGPT, as in all of my testing and API calls I have only paid 0.09 USD.

-

@SILLEN-0 so, to use a GA recipe, it would send a notification, and your module side would receive that and send the text to node_helper… the rest is the same.

-

@SILLEN-0 meaning of life. I assume the service has blocked killer questions.

-

@sdetweil any idea on how to do that? Also, I fixed it; I was just being stupid. I had changed the default question (the one used when config.js sets nothing else), but in config.js I still had the question set to "what's the weather like", and when I changed that, everything worked.
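For anyone hitting the same thing: MagicMirror combines a module's `defaults` with the module's `config` block from config.js, and the config.js values win. The sketch below imitates that with a plain shallow merge; as far as I know this is close to what MagicMirror does by default, but treat it as an approximation (nested objects in particular may be handled differently). `effectiveConfig` and the config.js value are hypothetical, for illustration only.

```javascript
// Approximation of how a module's effective config is built:
// values from config.js override the module's defaults (shallow merge).
function effectiveConfig(defaults, userConfig) {
  return Object.assign({}, defaults, userConfig);
}

const defaults = { question: "What is the weather like today?" };

// config.js still sets question, so editing the default has no effect
const fromConfigJs = { question: "What's the weather like?" };
console.log(effectiveConfig(defaults, fromConfigJs).question); // What's the weather like?

// with no question in config.js, the default applies
console.log(effectiveConfig(defaults, {}).question); // What is the weather like today?
```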

-

@SILLEN-0 how to do what?

-

@sdetweil how would you make the recipe part, and what should the command be? Like, how would you tell the recipe to send all the text after the phrase "chatgpt" in the notification as the question, and then receive that in chatgpt.js?

-

@SILLEN-0 the way modules communicate is through notifications:

`sendNotification(someStringValue, someData)`

It's a broadcast; all modules receive it.

They examine someStringValue and see if it's important to them, and if so they process someData; if not, they ignore it. There are hundreds of notifications firing all the time.

So you make a recipe that responds to the voice phrase "chatGpt", and the rest is the question,

and you have GA send the notification that your module is looking for. You should be able to see the recipe pattern by looking at others that do the same thing.

Once your module recognizes the notification string, it knows the data is the text of the question.

Your module already has a notificationReceived function, so adding another compare for the notification string is the big work…
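To make the broadcast idea concrete, here is a toy simulation that runs outside MagicMirror. Everything in it is hypothetical: the `broadcast` function, the two module objects, and the `CHATGPT_QUESTION` string. Every module receives every notification, and only the one that recognizes the string acts on the payload; in a real module that check lives in `notificationReceived`, which would then forward the text to node_helper with `this.sendSocketNotification(...)`.

```javascript
// Toy stand-in for MagicMirror's notification bus: every registered
// module receives every notification (a broadcast).
function broadcast(modules, notification, payload) {
  for (const mod of modules) {
    mod.notificationReceived(notification, payload);
  }
}

// Hypothetical ChatGPT module: reacts only to its own notification string.
const chatgptModule = {
  questions: [],
  notificationReceived(notification, payload) {
    if (notification === "CHATGPT_QUESTION") {
      // In a real module: this.sendSocketNotification("CHATGPT_QUESTION", payload);
      this.questions.push(payload);
    }
    // any other notification is ignored
  },
};

// Another module: it also receives every broadcast; here it only counts them.
const otherModule = {
  seen: 0,
  notificationReceived() {
    this.seen += 1;
  },
};

const modules = [chatgptModule, otherModule];
broadcast(modules, "CLOCK_SECOND", 42);
broadcast(modules, "CHATGPT_QUESTION", "say banana");
console.log(chatgptModule.questions); // [ 'say banana' ]
console.log(otherModule.seen); // 2
```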

-

@sdetweil yeah, I guess, but how in the world would you make it send just the phrase after "chatgpt" is said? In other words, I have no idea how the recipe file should be coded.

-

@SILLEN-0 you said you understood recipes.

-

@sdetweil maybe I phrased it badly. I know what the recipe structure should look like and I can make simple recipes, but having the notification send only everything after "chatgpt", that I have no idea how to do. As I said, I am a beginner in coding and trying my best.

-

@SILLEN-0 look at the memo and selfie shoot recipes.

Memo gets the rest of the words.

The selfie shoot 'command' is to send a notification. When the 'command' function is invoked, it gets params… i.e., the rest of the words.
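The "rest of the words" part boils down to a regular expression with a capture group: match the trigger word, capture whatever follows. The point above is that the recipe's command receives those words as params; the exact recipe structure should be taken from the memo and selfie shoot recipe files, because the sketch below deliberately does not use the GA recipe API at all. `extractQuestion` is a hypothetical helper showing only the capture technique.

```javascript
// Hypothetical helper: return the text spoken after a trigger word,
// e.g. "jarvis chatgpt say banana" -> "say banana".
// Returns null when the trigger word is absent or nothing follows it.
function extractQuestion(transcript, trigger = "chatgpt") {
  // escape regex special characters in the trigger word
  const escaped = trigger.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  // \b = whole word, \s+ = at least one space, (.+) = the rest (captured)
  const match = transcript.match(new RegExp(`\\b${escaped}\\s+(.+)$`, "i"));
  return match ? match[1].trim() : null;
}

console.log(extractQuestion("jarvis chatgpt say banana")); // say banana
console.log(extractQuestion("ChatGPT what is the meaning of life")); // what is the meaning of life
console.log(extractQuestion("what time is it")); // null
```

The match is case-insensitive because speech-to-text engines are not consistent about capitalization.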

-

@sdetweil OK, hello. A lot of things have happened in the last week. I have got something that should be working, but I had to fix a bunch of errors in the code. For the last couple of days, though, I have been stuck on this particular error:

```
0|MagicMirror | [25.01.2023 21:05.25.175] [LOG] Error in getResponse: RangeError: Maximum call stack size exceeded
0|MagicMirror | at isBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:22:18)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:40:9)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
0|MagicMirror | at hasBinary (/home/pi/MagicMirror/node_modules/socket.io-parser/build/cjs/is-binary.js:49:63)
```

The internet says that it is probably a part of the code calling itself, but I just can't seem to find anything. It may be super clear what the issue is here, but I really can't find the cause of the error. Help would be greatly appreciated. Here is my code:

chatgpt.js:

```javascript
Module.register("chatgpt", {
  defaults: {
    question: "What is the weather like today?",
  },

  start: function() {
    console.log("Starting module: " + this.name);
    this.question = this.config.question;
  },

  notificationReceived: function(notification, payload) {
    if (notification === "ASK_QUESTION") {
      this.question = payload.question;
      console.log("Received question: " + this.question);
      this.sendSocketNotification("ASK_QUESTION", this.question);
    }
  },

  socketNotificationReceived: function(notification, payload) {
    if (notification === "RESPONSE") {
      this.answer = payload.answer;
      console.log("Received answer: " + this.answer);
      console.log("Calling updateDom");
      this.updateDom();
    } else {
      console.log("Unhandled notification: " + notification);
      console.log("Payload: " + payload);
    }
  },

  getDom: function() {
    var wrapper = document.createElement("div");
    var question = document.createElement("div");
    var answer = document.createElement("div");
    if (this.answer) {
      answer.innerHTML = this.answer;
    } else {
      answer.innerHTML = "Ask me something";
    }
    question.innerHTML = this.question;
    wrapper.appendChild(question);
    wrapper.appendChild(answer);
    return wrapper;
  },
});
```

node_helper.js:

```javascript
const NodeHelper = require("node_helper");
const { Configuration, OpenAIApi } = require("openai");

module.exports = NodeHelper.create({
  start: function() {
    console.log("Starting node helper for: " + this.name);
    this.configuration = new Configuration({
      apiKey: "XXXXXXXXXXXXXXXXXXXXXXXXXX",
    });
    this.openai = new OpenAIApi(this.configuration);
  },

  // Send a message to the chatGPT API and receive a response
  getResponse: function(prompt) {
    return new Promise(async (resolve, reject) => {
      try {
        const response = await this.openai.createCompletion({
          model: "text-davinci-003",
          prompt: prompt,
          temperature: 0.7,
        });
        console.log("Received response from API: ", response);
        resolve(response);
      } catch (error) {
        console.log("Error in getResponse: ", error);
        reject(error);
      }
    });
  },

  // Handle socket notifications
  socketNotificationReceived: function(notification, payload) {
    if (notification === "ASK_QUESTION") {
      console.log("Received ASK_QUESTION notification with payload: ", payload);
      this.getResponse(payload)
        .then((response) => {
          // create a new object and update the answer property
          var responsePayload = {answer: response};
          console.log("Sending RESPONSE notification with payload: ", responsePayload);
          this.sendSocketNotification("RESPONSE", responsePayload);
        })
        .catch((error) => {
          console.log("Error in getResponse: ", error);
        });
    }
  },
});
```

The API call is being made and everything else is working, but as soon as I say "jarvis chatgpt say banana" (or any other input), I get the error.

-

@SILLEN-0 what is the data format of the response? Text, JSON, ???

You said

'say banana'

Did it return a WAV file?

If you add the MMM-Logging module, then you can see all the logs in one place (the output of npm start).

socket.io requires a serializable object.

The error says it is in the getResponse() function,

so, did your module get multiple requests concurrently and send them on?

I would make the notification string more specific, e.g. 'gpt question'.

There are hundreds of notifications going on; it's possible another module was sending that same string…
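On the point that socket.io requires a serializable object: the full response returned by the API client includes the underlying `request` (with its socket and response), and those objects point back at each other, so the structure is circular. socket.io's `hasBinary` walks objects recursively, so a cycle would explain the endless `hasBinary` frames and the `Maximum call stack size exceeded`. Note also that the `.catch` in `socketNotificationReceived` logs "Error in getResponse", so an error thrown by `sendSocketNotification` inside `.then` would most likely be printed under that same label. The sketch below uses `JSON.stringify` as a rough pre-check (`isPlainSerializable` is a hypothetical helper, and this is not what socket.io does internally) to show the difference between sending the whole response and sending only the text.

```javascript
// Rough, hypothetical pre-check: can this value be turned into plain JSON?
// Circular structures make JSON.stringify throw a TypeError.
function isPlainSerializable(value) {
  try {
    JSON.stringify(value);
    return true;
  } catch (err) {
    return false;
  }
}

// Simulated response with a cycle, similar in spirit to a real response
// whose request and socket objects reference each other.
const request = { method: "POST" };
const socket = { request };
request.socket = socket;
const fakeResponse = { status: 200, request, data: { choices: [{ text: "Banana!" }] } };

console.log(isPlainSerializable({ answer: fakeResponse })); // false
console.log(isPlainSerializable({ answer: fakeResponse.data.choices[0].text })); // true
```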

-

@SILLEN-0 also, that is the error part of the `pm2 logs --lines` output.

When I am developing,

I don't use pm2.

I just do `npm start >somefile.txt 2>&1` in the MagicMirror folder;

then all the console output is in one file.

Adding the logging module will also reflect all the modulename.js log statements, AND you can debug in the MM UI with Ctrl-Shift-I:

select the Sources tab, find your modulename.js in the left nav, and you can put breakpoints on lines of code and step through it.

-

@sdetweil hey, I'm sorry for not being able to answer, but I have other stuff going on and a math test coming up. The text is sent over as a string, and the module does receive the string and gets a response from the API; the issue happens after that. I googled the error, and people say there is probably a loop in the code somewhere. I will try the debug module when I have time. I really want to get this to work.

-

@SILLEN-0 the data back from the API looks like this:

```
status: 200,
statusText: 'OK',
headers: {
  date: 'Sun, 29 Jan 2023 23:56:49 GMT',
  'content-type': 'application/json',
  'content-length': '343',
  connection: 'close',
  'access-control-allow-origin': '*',
  'cache-control': 'no-cache, must-revalidate',
  'openai-model': 'text-davinci-003',
  'openai-organization': 'user-ieyrbalt3azhd9sd82splktg',
  'openai-processing-ms': '2790',
  'openai-version': '2020-10-01',
  'strict-transport-security': 'max-age=15724800; includeSubDomains',
  'x-request-id': 'd341b29147db89ee20886107e22b41a4'
},
config: {
  transitional: [Object],
  adapter: [Function: httpAdapter],
  transformRequest: [Array],
  transformResponse: [Array],
  timeout: 0,
  xsrfCookieName: 'XSRF-TOKEN',
  xsrfHeaderName: 'X-XSRF-TOKEN',
  maxContentLength: -1,
  maxBodyLength: -1,
  validateStatus: [Function: validateStatus],
  headers: [Object],
  method: 'post',
  data: '{"model":"text-davinci-003","prompt":"What is the weather like today?","temperature":0.7}',
  url: 'https://api.openai.com/v1/completions'
},
request: ClientRequest {
  _events: [Object: null prototype],
  _eventsCount: 7,
  _maxListeners: undefined,
  outputData: [],
  outputSize: 0,
  writable: true,
  destroyed: false,
  _last: true,
  chunkedEncoding: false,
  shouldKeepAlive: false,
  maxRequestsOnConnectionReached: false,
  _defaultKeepAlive: true,
  useChunkedEncodingByDefault: true,
  sendDate: false,
  _removedConnection: false,
  _removedContLen: false,
  _removedTE: false,
  _contentLength: null,
  _hasBody: true,
  _trailer: '',
  finished: true,
  _headerSent: true,
  _closed: false,
  socket: [TLSSocket],
  _header: 'POST /v1/completions HTTP/1.1\r\n' +
    'Accept: application/json, text/plain, */*\r\n' +
    'Content-Type: application/json\r\n' +
    'User-Agent: OpenAI/NodeJS/3.1.0\r\n' +
    'Authorization: Bearer sk-XXXXXXXXXXXXXXXXXXXXXXXX\r\n' +
    'Content-Length: 89\r\n' +
    'Host: api.openai.com\r\n' +
    'Connection: close\r\n' +
    '\r\n',
  _keepAliveTimeout: 0,
  _onPendingData: [Function: nop],
  agent: [Agent],
  socketPath: undefined,
  method: 'POST',
  maxHeaderSize: undefined,
  insecureHTTPParser: undefined,
  path: '/v1/completions',
  _ended: true,
  res: [IncomingMessage],
  aborted: false,
  timeoutCb: null,
  upgradeOrConnect: false,
  parser: null,
  maxHeadersCount: null,
  reusedSocket: false,
  host: 'api.openai.com',
  protocol: 'https:',
  _redirectable: [Writable],
  [Symbol(kCapture)]: false,
  [Symbol(kNeedDrain)]: false,
  [Symbol(corked)]: 0,
  [Symbol(kOutHeaders)]: [Object: null prototype],
  [Symbol(kUniqueHeaders)]: null
},
data: {
  id: 'cmpl-6eBpQkPEmW4z1PoxRTTprs8Lhoqn4',
  object: 'text_completion',
  created: 1675036608,
  model: 'text-davinci-003',
  choices: [Array],
  usage: [Object]
}
}
answer: { ...identical to the response object above, including request: ClientRequest {...} ... } }
```

I don't see the text of the answer.
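The answer text is most likely in there, just collapsed by the logger as `choices: [Array]`. With the v3 `openai` Node library, `createCompletion` resolves to the whole HTTP response, and the generated text sits at `response.data.choices[0].text` (that is the field the v3 README prints). Below is a minimal sketch of a `getResponse` that returns only that string, assuming the v3 client. Passing the client in as a parameter is a choice made here so the logic can be tried without the real API; it is not how the module in this thread is structured.

```javascript
// Sketch, assuming the v3 "openai" package, where createCompletion()
// resolves to an HTTP response whose body is in response.data.
// Returns only the completion text: a plain string, which is safe to
// send through sendSocketNotification (unlike the whole response).
async function getResponse(client, prompt) {
  const response = await client.createCompletion({
    model: "text-davinci-003",
    prompt: prompt,
    temperature: 0.7,
  });
  const choices = response.data && response.data.choices;
  if (!choices || choices.length === 0) {
    throw new Error("no choices in API response");
  }
  // completions often start with blank lines, so trim them
  return choices[0].text.trim();
}

// In node_helper.js this could be used roughly like:
//   getResponse(this.openai, payload)
//     .then((text) => this.sendSocketNotification("RESPONSE", { answer: text }))
//     .catch((error) => console.log("ChatGPT request failed:", error.message));
```

Keeping `getResponse` as an `async` function also removes the need for the `new Promise(async ...)` wrapper in the original helper.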

-

@sdetweil hey, any updates on this?
