Read the statement by Michael Teeuw here.

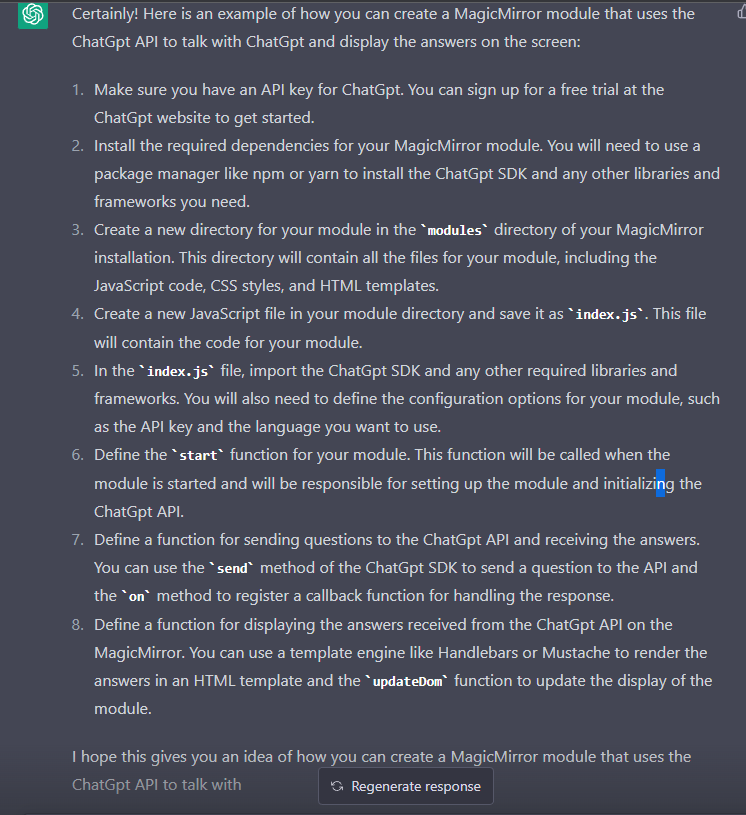

ChatGPT integration

-

@SILLEN-0 The data returned by the API looks like this:

answer: {
status: 200, statusText: 'OK',
headers: { date: 'Sun, 29 Jan 2023 23:56:49 GMT', 'content-type': 'application/json', 'content-length': '343', connection: 'close', 'access-control-allow-origin': '*', 'cache-control': 'no-cache, must-revalidate', 'openai-model': 'text-davinci-003', 'openai-organization': 'user-ieyrbalt3azhd9sd82splktg', 'openai-processing-ms': '2790', 'openai-version': '2020-10-01', 'strict-transport-security': 'max-age=15724800; includeSubDomains', 'x-request-id': 'd341b29147db89ee20886107e22b41a4' },
config: { transitional: [Object], adapter: [Function: httpAdapter], transformRequest: [Array], transformResponse: [Array], timeout: 0, xsrfCookieName: 'XSRF-TOKEN', xsrfHeaderName: 'X-XSRF-TOKEN', maxContentLength: -1, maxBodyLength: -1, validateStatus: [Function: validateStatus], headers: [Object], method: 'post', data: '{"model":"text-davinci-003","prompt":"What is the weather like today?","temperature":0.7}', url: 'https://api.openai.com/v1/completions' },
request: ClientRequest { _events: [Object: null prototype], _eventsCount: 7, _maxListeners: undefined, outputData: [], outputSize: 0, writable: true, destroyed: false, _last: true, chunkedEncoding: false, shouldKeepAlive: false, maxRequestsOnConnectionReached: false, _defaultKeepAlive: true, useChunkedEncodingByDefault: true, sendDate: false, _removedConnection: false, _removedContLen: false, _removedTE: false, _contentLength: null, _hasBody: true, _trailer: '', finished: true, _headerSent: true, _closed: false, socket: [TLSSocket], _header: 'POST /v1/completions HTTP/1.1\r\n' + 'Accept: application/json, text/plain, */*\r\n' + 'Content-Type: application/json\r\n' + 'User-Agent: OpenAI/NodeJS/3.1.0\r\n' + 'Authorization: Bearer sk-[redacted]\r\n' + 'Content-Length: 89\r\n' + 'Host: api.openai.com\r\n' + 'Connection: close\r\n' + '\r\n', _keepAliveTimeout: 0, _onPendingData: [Function: nop], agent: [Agent], socketPath: undefined, method: 'POST', maxHeaderSize: undefined, insecureHTTPParser: undefined, path: '/v1/completions', _ended: true, res: [IncomingMessage], aborted: false, timeoutCb: null, upgradeOrConnect: false, parser: null, maxHeadersCount: null, reusedSocket: false, host: 'api.openai.com', protocol: 'https:', _redirectable: [Writable], [Symbol(kCapture)]: false, [Symbol(kNeedDrain)]: false, [Symbol(corked)]: 0, [Symbol(kOutHeaders)]: [Object: null prototype], [Symbol(kUniqueHeaders)]: null },
data: { id: 'cmpl-6eBpQkPEmW4z1PoxRTTprs8Lhoqn4', object: 'text_completion', created: 1675036608, model: 'text-davinci-003', choices: [Array], usage: [Object] }
}

I don’t see the text of the answer.
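
For readers hitting the same wall: in output like the above, the completion text is most likely sitting behind `choices: [Array]`, because `console.log` collapses nested values beyond its default inspection depth. Below is a minimal sketch of reading it, assuming the axios-style response shape visible in the log (the API's JSON body under `data`). The helper name `extractCompletionText` and the sample text are hypothetical, made up for illustration.

```javascript
// Hypothetical helper: pull the generated text out of an axios-style
// response whose JSON body (under `data`) is a text_completion object.
function extractCompletionText(response) {
  const choices = response && response.data && response.data.choices;
  if (!Array.isArray(choices) || choices.length === 0) {
    return null; // nothing to read
  }
  const text = choices[0].text;
  return typeof text === "string" ? text.trim() : null;
}

// Trimmed-down stand-in for the logged object.
// The `text` value is invented for this example, not taken from the thread.
const sampleResponse = {
  status: 200,
  data: {
    object: "text_completion",
    model: "text-davinci-003",
    choices: [{ text: "\n\nI can't check live weather.", index: 0 }],
  },
};

console.log(extractCompletionText(sampleResponse));

// To see nested values in full instead of [Array] / [Object],
// serialize just the body rather than logging the whole response:
console.log(JSON.stringify(sampleResponse.data, null, 2));
```

Logging only `response.data` also keeps the request headers, including the `Authorization` line, out of anything pasted into a public post.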

-

@sdetweil Hey, any updates on this?

-

@sdetweil Do you have any updates regarding the speech capture process?

-

@PraiseU I wasn’t working on any speech capture integration.

I outlined the possibilities.

-

@sdetweil Oh okay! I found this module: https://github.com/TheStigh/MMM-VoiceCommander. Do you think it could be useful, or does it still have the accuracy problem?

-

@PraiseU said in ChatGPT integration:

@sdetweil Oh okay! I found this module: https://github.com/TheStigh/MMM-VoiceCommander. Do you think it could be useful, or does it still have the accuracy problem?

Yes, it is built on mmm-voice.

-

@sdetweil Can you shed some light on what you meant by “this is why the MMM-GoogleAssistant provides mechanisms (recipe) to use the captured text for non-Google uses”? And how can I approach this? Thanks a lot.

-

@PraiseU It’s exactly what it says.

GoogleAssistant collects text from voice.

But many voice commands are not directed at Google itself ($search, play, turn on/off lights…). So, if you write a recipe, you can direct the text to other purposes.

-

@sdetweil Oh okay, so if I were to say “GPT Explain software engineering”, it would get the text and I could forward it to the module it needs to go to? Do you have any examples that could help?
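
The idea in this question, taking the transcribed text and forwarding it by keyword, can be sketched roughly as follows. This is only an illustration of the routing logic, not the MMM-GoogleAssistant recipe format, which should be taken from that module's own documentation. The names `routeTranscript`, `handlers`, and the `chatgpt` target are all hypothetical.

```javascript
// Hypothetical router: picks a handler based on the first word of the
// transcribed text and passes it the rest of the sentence.
// NOT the MMM-GoogleAssistant recipe syntax; just the underlying idea.
function routeTranscript(transcript, handlers) {
  const words = transcript.trim().split(/\s+/);
  const keyword = (words[0] || "").toLowerCase();
  const rest = words.slice(1).join(" ");
  const handler = handlers.get(keyword);
  if (!handler || rest === "") {
    // No matching keyword: leave the text for the assistant itself.
    return { handled: false, transcript };
  }
  return { handled: true, result: handler(rest) };
}

// Assumed handler table. In a MagicMirror module the handler could
// instead send a notification carrying the prompt to another module.
const handlers = new Map([
  ["gpt", (prompt) => ({ target: "chatgpt", prompt })],
]);

console.log(routeTranscript("GPT Explain software engineering", handlers));
console.log(routeTranscript("turn on the lights", handlers));
```

Under these assumptions, the first call is routed to the `gpt` handler with the prompt "Explain software engineering", while the second is left unhandled.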

-

@PraiseU I mentioned how to do that before.